Diamond


Note: If you wish to use the information provided on this page, please cite it as follows: Popper, R. (2008) Foresight Methodology, in Georghiou, L., Cassingena, J., Keenan, M., Miles, I. and Popper, R., The Handbook of Technology Foresight: Concepts and Practice, Edward Elgar, Cheltenham, pp. 44-88.

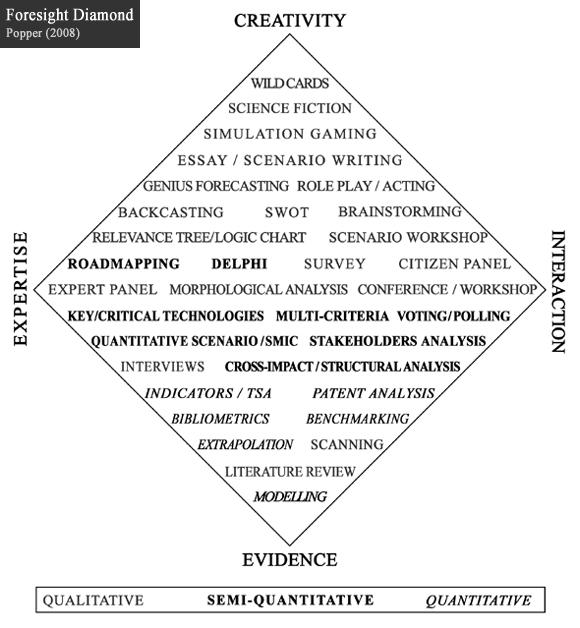

At the heart of my professional endeavours lies the Foresight Diamond Framework, a conceptual model I developed in 2008 to structure foresight methods based on their primary knowledge sources: creativity, expertise, interaction, and evidence. This framework, detailed within the broader context of foresight methodology in “The Handbook of Technology Foresight”, was born from a collaborative and bottom-up approach to understanding the multifaceted nature of foresight practices.

The genesis of the Foresight Diamond was inspired by the realisation that effective foresight cannot lean on a singular type of knowledge. Each corner of the Diamond — creativity, expertise, interaction, and evidence — plays a crucial role in painting a comprehensive picture of the future. This nuanced approach acknowledges that while methods might predominantly draw from one knowledge source, they are invariably enriched by the others. For instance, creativity-based methods, while grounded in the imaginative and original thinking of individuals and groups, gain depth and context when intertwined with expertise, evidence, and interactive discourse.

Developing this framework was a journey filled with action research and learning, a process that underscored the wisdom in Albert Einstein’s reverence for imagination and Arthur C. Clarke’s cautionary words on the limitations of expertise. It was a vivid reminder of the dynamic interplay between the imaginative leaps afforded by creativity and the grounded insights offered by expertise. Similarly, the framework’s emphasis on interaction-based methods reflects a commitment to inclusivity and the democratic ethos of participation, recognising that foresight is most robust when it engages a diversity of voices and perspectives.

The evidence-based corner of the Diamond, meanwhile, serves as a grounding force. It reminds us of the importance of reliable data and rigorous analysis in understanding and forecasting phenomena. Yet as we navigate vast landscapes of information and statistics, the warning about "lies, damned lies, and statistics", which Mark Twain attributed to Benjamin Disraeli, remains a constant reminder to approach data with discernment. Data can mislead if it is not carefully contextualised and interpreted.

The Foresight Diamond also acknowledges the transformative impact of information technology on foresight practices, from enhancing participatory processes to enabling more sophisticated data analysis. This aspect of the framework reflects an ongoing exploration of how tools and technologies can augment our ability to generate, analyse, and communicate foresight insights, while also highlighting the prerequisites for their effective use.

Reflecting on the implementation and adaptation of the Foresight Diamond across various projects and contexts, I am continually reminded of the framework’s foundational principle: there is no one-size-fits-all approach in foresight. The richness of this field lies in its flexibility and adaptability, encouraging practitioners to tailor their methodological approaches to the unique contours of each project. The journey of developing and applying the Foresight Diamond has been a profound learning experience, illuminating the diverse pathways through which we can envision and shape the future.

In sharing this reflection, I hope to convey my appreciation for the collaborative spirit and intellectual curiosity that have guided the development of the Foresight Diamond Framework.